10 Sep, 2023

As businesses evolve in the digital age, the need for quick, accurate, and efficient data retrieval and problem-solving has never been more crucial. One tool that’s making waves in this regard is ChatGPT, a conversational agent based on the GPT-4 architecture. You may have heard many warnings about the accuracy of ChatGPT’s answers. Those accuracy concerns, together with a lack of important business or domain context, are the major obstacles preventing more businesses from getting real value out of ChatGPT in their organizations. But how does ChatGPT get the information it needs to answer questions or perform tasks? This is where the integration of a knowledge base comes in.

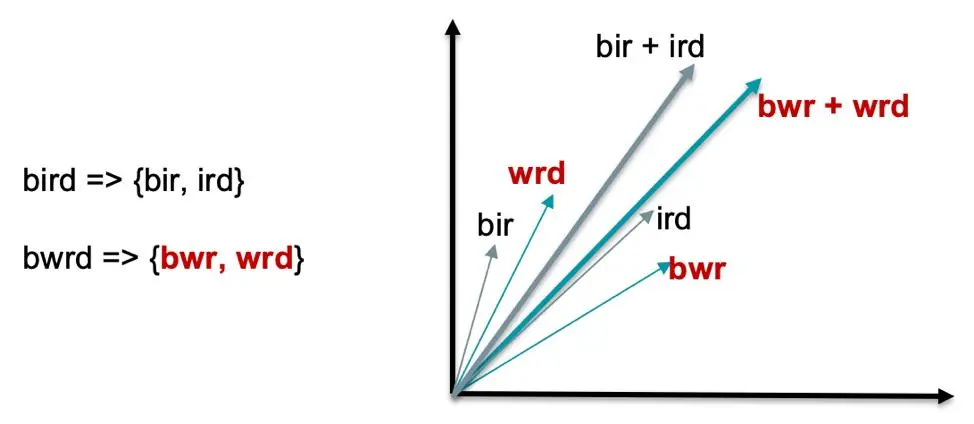

As we delve deeper into the workings of ChatGPT, it’s important to note a core principle behind it: embeddings. Embeddings translate text into high-dimensional numerical vectors that capture both semantic and syntactic meaning. They are pivotal for training the neural network to produce contextually appropriate and coherent responses.

The implications of incorporating ChatGPT into your business’s internal database or knowledge base can be substantial. It could significantly enhance your

This article dives into the practical aspects of using ChatGPT, including its accuracy, cost, and potential risks. We’ll also discuss how ChatGPT can be integrated with your company’s SharePoint, Google Drive, Confluence, or any other knowledge base source, and how you can use it on custom data.

ChatGPT, a Large Language Model (LLM), is frequently sought after to augment a company’s knowledgebase. However, employing this LLM effectively requires a clear understanding of the concepts of training, prompting and vector embeddings, and how they influence the integration of ChatGPT.

When aiming for an enhanced knowledge base, many assume that uploading an encyclopedia’s worth of data during training will reshape the model’s understanding. This is far from the truth. ChatGPT’s training targets general contextual awareness, not the memorization of specific knowledge sources or databases. Understanding this is key to balancing the practical use of ChatGPT with the caution needed when handling enterprise knowledge.

Training in Language Models like ChatGPT refers to the initial phase where a model learns by ingesting and processing vast amounts of text data. The primary objective is to enable the model to generate coherent and contextually accurate responses when prompted with queries. During the training phase, the model learns patterns, associations, and structures in the text data, allowing it to make educated guesses when faced with new, unseen text.

There is a common misconception that uploading a single comprehensive document like an encyclopedia or a company’s organizational structure will make the model an “expert” in that domain. However, this is far from the truth.

Focused Effort: The initial training and fine-tuning are more about a focused effort, involving multiple examples to help the model understand and label unseen instances. A single document is not sufficient for this.

Domain-specific Information: When it comes to understanding unique, domain-specific data, a one-off upload doesn’t help the model to deeply understand the nuances.

Myth-busting: Just like a model won’t comprehend a company’s organizational structure based on a single document, uploading an encyclopedia will not make it an expert on every subject in the encyclopedia.

Prompting, on the other hand, refers to the queries or statements used to trigger a model into generating a specific response. These prompts can be finely tuned to get the most relevant and accurate output from the model. Prompting becomes especially crucial in the application phase where you engage the model in real-time or batch tasks.
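To make prompting concrete, here is a minimal sketch of how an application might assemble a prompt for a knowledge-base assistant. It assumes the role/content message format used by OpenAI’s chat models; the helper name `build_messages`, the instruction wording, and the sample snippets are hypothetical.

```python
def build_messages(question: str, context_snippets: list[str]) -> list[dict]:
    """Assemble a chat prompt: instructions, retrieved context, then the user question."""
    # The system message constrains the model to the supplied context,
    # which helps reduce confident but unsupported answers.
    system = (
        "You are an internal knowledge-base assistant. "
        "Answer only from the numbered context below. "
        "If the answer is not in the context, say that you don't know."
    )
    # Number each snippet so answers can cite their source, e.g. [1].
    context = "\n".join(f"[{i}] {s}" for i, s in enumerate(context_snippets, start=1))
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
    ]


# Hypothetical snippets, as a retrieval step might return them.
messages = build_messages(
    "How do I request annual leave?",
    ["Leave requests are submitted through the HR portal.",
     "Managers approve requests within five working days."],
)
print(messages[1]["content"])
```

Keeping the instructions in the system message and the data in the user message makes it easy to swap in different context for each question without rewriting the prompt.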

Vector embeddings in Large Language Models (LLMs) like ChatGPT refer to the representation of words, phrases, or even entire sentences as vectors in a continuous mathematical space. This enables the model to understand semantic similarity between different pieces of text, and forms the basis of the model’s ability to generate coherent and contextually relevant text. Each word or piece of text is transformed into a series of numbers that capture its meaning and context within the dataset the model was trained on. Words that are contextually or semantically similar will have vector representations that are close to each other in this multi-dimensional space.
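“Closeness” in embedding space is commonly measured with cosine similarity. The sketch below uses made-up three-dimensional vectors purely for illustration; real embedding models produce hundreds or thousands of dimensions, and the words and numbers here are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical 3-dimensional "embeddings" for three words.
vectors = {
    "invoice": [0.9, 0.1, 0.0],
    "bill":    [0.8, 0.2, 0.1],
    "holiday": [0.0, 0.1, 0.9],
}
print(round(cosine_similarity(vectors["invoice"], vectors["bill"]), 3))     # 0.984
print(round(cosine_similarity(vectors["invoice"], vectors["holiday"]), 3))  # 0.012
```

Semantically related terms ("invoice" and "bill") score close to 1, while unrelated ones score close to 0.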

For further details, you can refer to our article “AI Model Training or Prompt Engineering? Cost-effective Approach”, which elaborates on why prompt engineering could be a more efficient way to get the desired outputs. In many cases, you don’t need to go through the resource-heavy process of retraining the model. Instead, careful crafting of prompts can lead to equally satisfying results.

When it comes to deploying ChatGPT in a business environment, the approach can differ based on specific needs, existing tech stacks, and budgets. Here are some options to consider for a seamless and effective deployment:

With custom development, you build your chatbot interface from scratch and integrate it with the OpenAI API.

This option provides great flexibility, allowing you to plug virtually any data source into ChatGPT: specific files, websites, cloud storage (SharePoint, Google Drive, Dropbox), or parsed web results.

This requires proprietary development, and the first version may take up to a month to deliver.
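At the heart of a custom integration is an HTTP call to the OpenAI API. The sketch below uses only the Python standard library to build a Chat Completions request; the model name `gpt-4`, the `OPENAI_API_KEY` environment variable, the `temperature` setting, and the helper names are assumptions, and a production system would more likely use the official `openai` SDK with retries and error handling.

```python
import json
import os
import urllib.request
from typing import Optional

# Chat Completions endpoint of the OpenAI API.
OPENAI_URL = "https://api.openai.com/v1/chat/completions"


def make_chat_request(messages: list, model: str = "gpt-4",
                      api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a Chat Completions HTTP request."""
    key = api_key or os.environ.get("OPENAI_API_KEY", "")
    # temperature=0 is an assumed choice for more repeatable answers.
    body = {"model": model, "messages": messages, "temperature": 0}
    return urllib.request.Request(
        OPENAI_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {key}", "Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=60) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


request = make_chat_request([{"role": "user", "content": "Summarize our travel policy."}])
# answer = send(request)  # requires network access and a valid API key
```

Separating request construction from sending makes the integration easy to test offline and to route through your own proxy or logging layer.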

Pro: Total Control and Customization

Con: Time-Consuming and Resource-Heavy

Cost and Timelines

Note: For specific numbers, it’s advisable to inquire for a proposal. It’s free and will give you a detailed estimate.

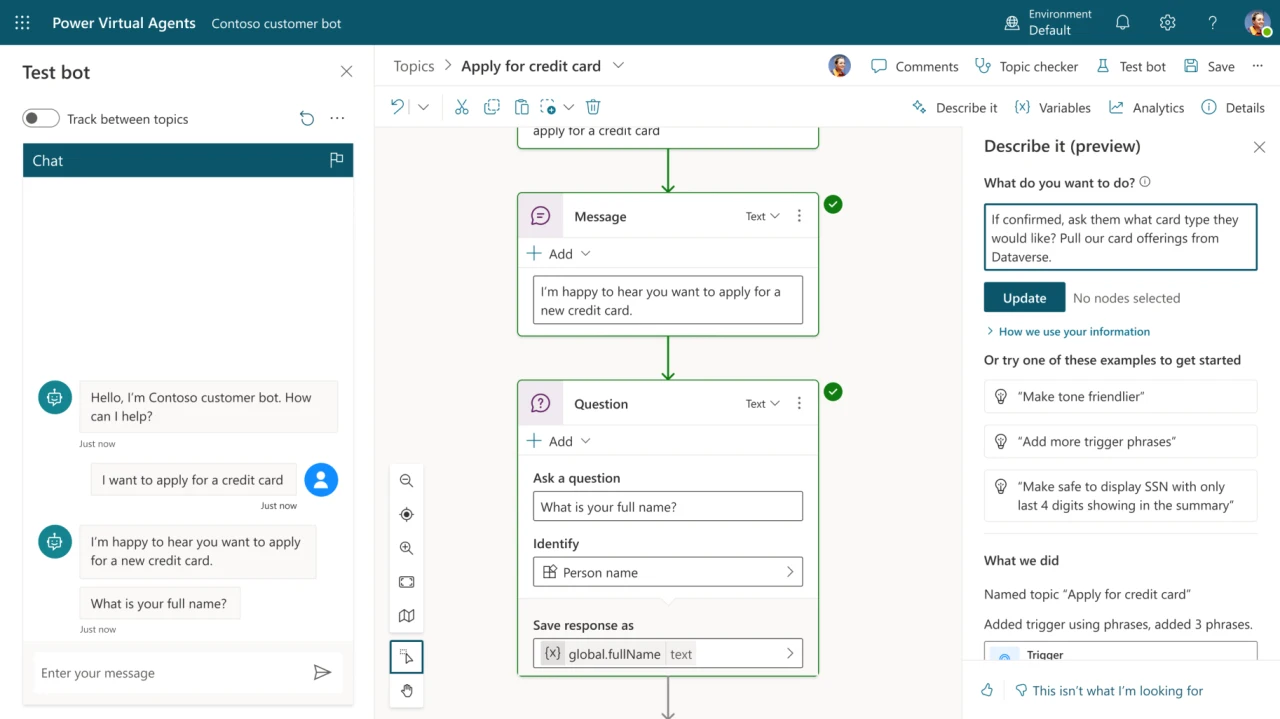

ChatGPT can be integrated directly into SharePoint using Microsoft’s Power Virtual Agents.

Power Virtual Agents lets you create powerful AI-powered chatbots for a range of requests—from providing simple answers to common questions to resolving issues requiring complex conversations.

Open the Bots panel and click New bot. Enter a name, select the language and environment, and then click Create. This spins up a new Power Virtual Agents bot. Note: it takes a few minutes for the bot to be set up.

Microsoft has added Boosted Conversations to Power Virtual Agents. You can link an external website, and the bot will generate answers from it when it cannot find a relevant topic. The improved version now supports up to 4 public websites and 4 internal Microsoft sites (SharePoint sites and OneDrive).

Pro: Easy Integration with Microsoft Ecosystem

Con: Limited to SharePoint Capabilities

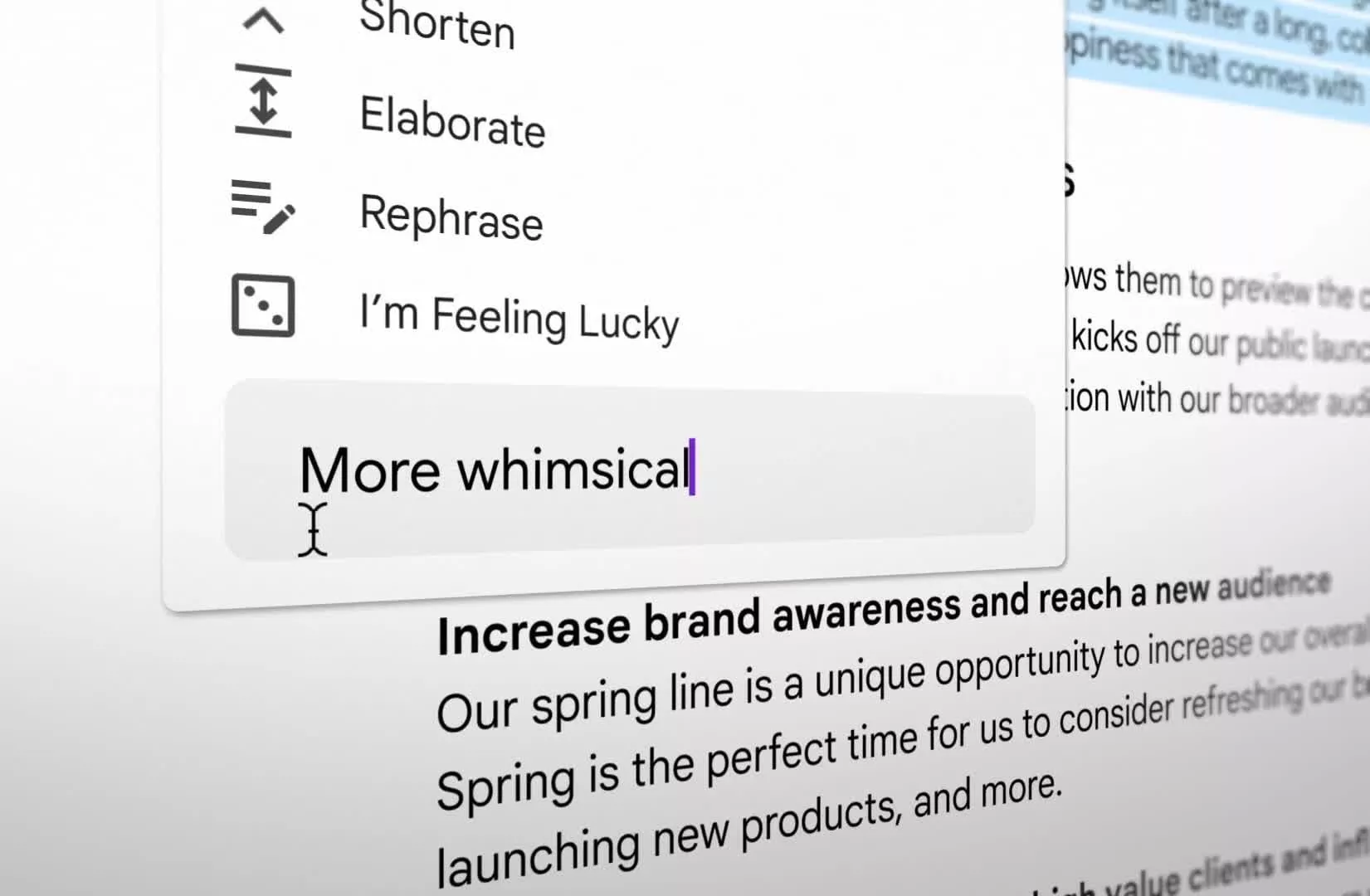

Integrating ChatGPT with Google Docs requires some technical acumen, but it can be incredibly beneficial. By writing an Apps Script project that uses your own OpenAI API key, you can bring ChatGPT’s capabilities into Google Docs.

Add-ons like GPT for Sheets and Docs, AI Email Writers, and Reclaim.ai can help you bring AI into Google Workspace. They can dramatically change the way you work in Google Docs, improving productivity and enhancing text creation and editing. However, they do not currently let ChatGPT draw on all the data you have in Google Drive.

At its latest I/O presentation, Google hinted at an assistant that would read from Google Drive, but no details have been provided yet.

Pro: Seamless with Google Services

Con: Less Customization

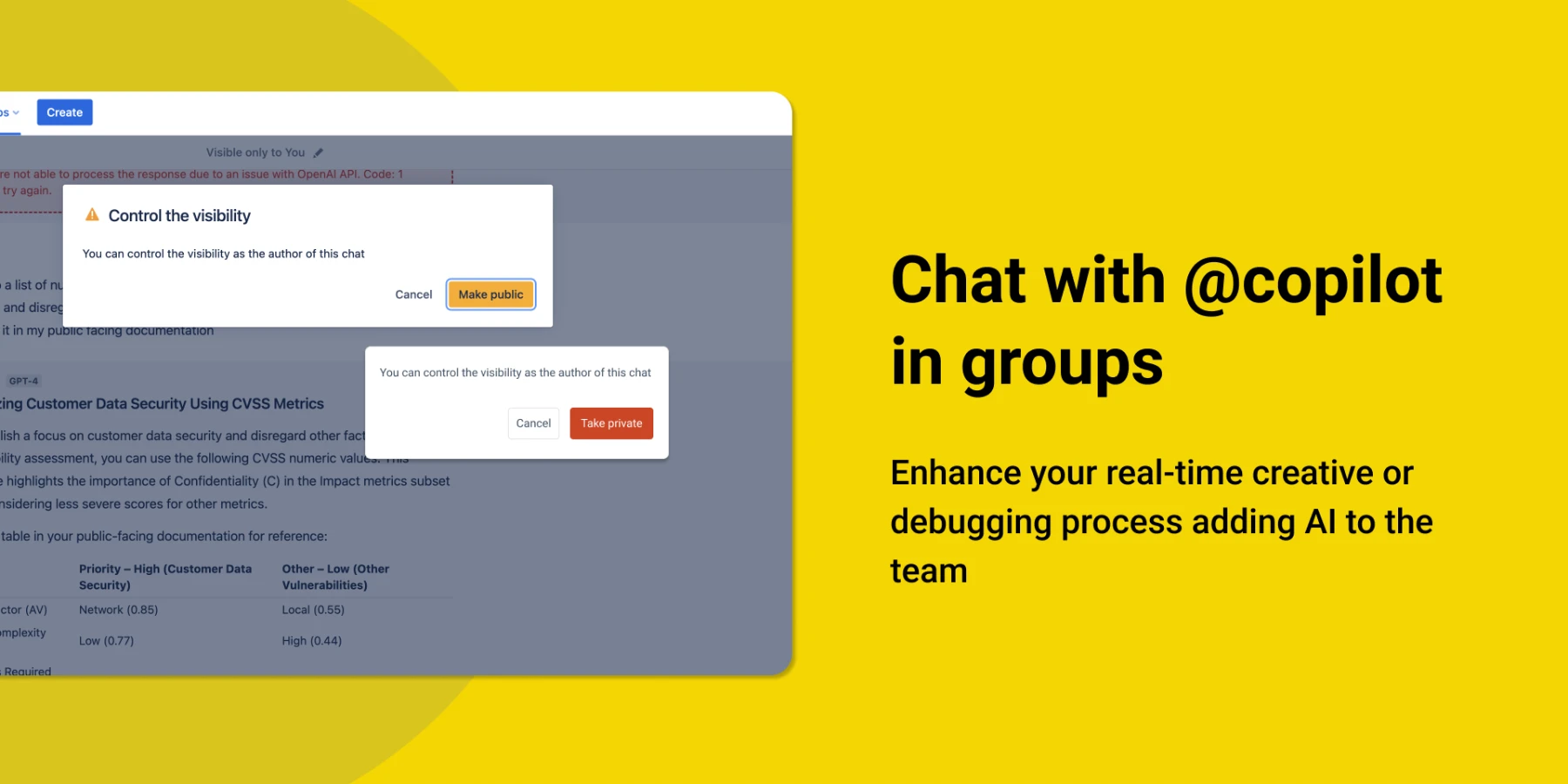

You can use third-party plugins such as Copilot: ChatGPT for Confluence to integrate ChatGPT into a Confluence knowledge base.

This option offers an easy plug-and-play solution but is limited to a specific knowledge base system, such as Atlassian Confluence.

Pro: Seamless with Confluence

Con: Data Limitations

In summary, the best deployment option will depend on your existing ecosystem, the resources you have at your disposal, and the specific use cases you have in mind for ChatGPT. Each approach comes with its own set of pros and cons, and understanding these can help you make an informed decision.

In the rapidly evolving business landscape, timely access to accurate and essential information is crucial to driving success. One consulting firm realized it needed to enhance its knowledge management system so its consultants could respond and deliver services to clients faster.

With its previous system, the consulting firm struggled to quickly retrieve and interpret the extensive regulations it needed to provide adaptable solutions to its customers. For instance, interpreting new regulations or comparing different jurisdictions often took longer than a typical client was willing to wait. This lag in response time began to hurt customer satisfaction and contributed to a decline in repeat business.

In response to these challenges, the firm engaged PDLT, an AI consulting company skilled at building MVPs (Minimum Viable Products) in less than three months. The AI consulting firm swiftly went to work and developed a proprietary system that indexed hundreds of terabytes of diverse documents.

The system used vector embeddings to process the information, transforming the vast quantity of unstructured text into high-dimensional numerical vectors. This made text retrieval more efficient. Once vectorization was complete, the firm’s own data was embedded as a company-specific knowledge base in a web application built on OpenAI, creating a unique “ChatGPT knowledge base”.
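The case study does not publish PDLT’s implementation, but the general chunk, embed, and retrieve pattern it describes can be sketched as follows. This is a toy version under stated assumptions: `toy_embed` is a word-count stand-in for a real embedding model, an in-memory list stands in for a vector database, and all names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def chunk(text: str, max_words: int = 50, overlap: int = 10) -> list[str]:
    """Split text into overlapping word windows small enough to embed one at a time."""
    words = text.split()
    step = max_words - overlap
    return [" ".join(words[i:i + max_words])
            for i in range(0, max(len(words) - overlap, 1), step)]


def toy_embed(text: str) -> Counter:
    """Stand-in for a real embedding model: a sparse bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(docs: dict[str, str]) -> list[tuple[str, str, Counter]]:
    """Chunk every document and store (doc_id, chunk_text, vector) rows."""
    return [(doc_id, piece, toy_embed(piece))
            for doc_id, text in docs.items()
            for piece in chunk(text)]


def search(index: list[tuple[str, str, Counter]], query: str, k: int = 3) -> list[tuple[str, str]]:
    """Return the k chunks whose vectors are most similar to the query vector."""
    q = toy_embed(query)
    ranked = sorted(index, key=lambda row: cosine(q, row[2]), reverse=True)
    return [(doc_id, text) for doc_id, text, _ in ranked[:k]]


# Hypothetical regulatory snippets.
docs = {
    "eu-gdpr": "GDPR Article 17 gives data subjects the right to erasure of personal data.",
    "us-ccpa": "The CCPA lets California consumers opt out of the sale of their personal information.",
}
index = build_index(docs)
print(search(index, "right to erasure under GDPR", k=1))  # top hit comes from "eu-gdpr"
```

In a real system, the retrieved chunks would be passed as context in the prompt sent to the model, so answers are grounded in the firm’s own documents.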

PDLT applied chatbot user-experience best practices, enabling consultants to access vital information whenever they need it.

The results were immediate and noticeable. After the first iteration of the project was delivered within a month, the consulting firm began to see its transformative impact. Junior consultants began providing senior-level advice thanks to the readily available information, and time to first response improved significantly.

In essence, this AI-powered knowledge base allowed the consultants to deliver agile and accurate solutions to clients, backed by ChatGPT’s abilities in understanding, processing, and returning relevant results.

Your business’s knowledgebase is a valuable repository of information that supports both your internal teams and external customers. However, traditional searching and information retrieval methods can feel cumbersome and outdated. By integrating an advanced AI like ChatGPT into your knowledgebase, you can modernize the way users interact with your information, making it easier and more intuitive to find what they need.

Here is how to proceed:

1. Identification of Core Knowledge Areas:

Identify the key domains of knowledge within your business. These could range from product details, troubleshooting guides, business strategies, or any other crucial information needed for daily operations.

Example: If you run an IT service company, your core knowledge areas could include hardware requirements, software troubleshooting, client relationship management, and cybersecurity protocols. ChatGPT can improve search and retrieval across the guides, manuals, and past queries stored in your knowledgebase.

2. Define the Risks and Required Access Levels:

Ensure that sensitive data within your business is properly safeguarded. Not every piece of information should be accessible by every user. Design different access levels based on roles, departments, or security clearance within your organization.

Example: Your product development process might be confidential, so you’ll restrict access to only members of the product team. In contrast, generic troubleshooting guides for common technical issues could be widely accessible to all staff and even customers.

3. Limit the Knowledgebase Indexing to Respective Access Levels:

With access levels defined, apply these restrictions to your knowledgebase indexing. That way, ChatGPT can serve relevant data based on an individual user’s access level rather than showing results they are not authorized to view.

Example: When a customer service executive asks ChatGPT about available software updates, they receive a response appropriate to their access level: information that’s safe to share with customers. Meanwhile, an engineer receives more in-depth technical data about the same update because their access level permits it.
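One way to apply steps 2 and 3 is to tag every indexed chunk with the roles allowed to see it and filter before retrieval, so restricted text never reaches the prompt. The sketch below assumes hypothetical role names and chunk contents modeled on the software-update example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    allowed_roles: frozenset  # roles permitted to see this chunk (hypothetical names)


def visible_chunks(chunks: list, user_roles: set) -> list:
    """Keep only chunks that share at least one role with the user."""
    return [c for c in chunks if c.allowed_roles & user_roles]


# Hypothetical index entries for the software-update example.
chunks = [
    Chunk("Version 2.3 adds offline mode and is safe to announce to customers.",
          frozenset({"support", "engineering"})),
    Chunk("Version 2.3 migrates the sync service to a new message queue.",
          frozenset({"engineering"})),
]

support_view = visible_chunks(chunks, {"support"})
engineer_view = visible_chunks(chunks, {"engineering"})
print(len(support_view), len(engineer_view))  # 1 2
```

Filtering before ranking, rather than after the model has answered, keeps unauthorized text out of the model’s context entirely; maintaining a separate index per access level is an alternative with the same effect.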

4. Integrate ChatGPT with Existing Information Systems:

Depending on your existing information management platforms, like SharePoint, Confluence, or Google Drive, your method of ChatGPT integration will differ.

Structuring your company’s knowledgebase benefits you through:

These steps might seem overwhelming at first, but remember, you’re not alone in this journey. AI consulting firms like Pragmatic DLT (PDLT) can help guide you through the entire process of setting up ChatGPT for your knowledgebase in less than three months. By leveraging this game-changing AI, you’ll be creating a significantly more efficient and effective knowledge management process for your business.

Pragmatic DLT (PDLT) focuses on leveraging cutting-edge technologies such as AI to streamline various business processes, enabling companies to rapidly develop a minimum viable product (MVP) in less than three months. Below are some of the key areas where PDLT’s expertise can be highly beneficial.

AI Chatbots: PDLT can help in the development of AI-driven customer service chatbots capable of answering FAQs, solving common issues, and even upselling products, thereby freeing up human resources for more complex tasks.

Automated Ticketing System: With AI, it’s easier to categorize, prioritize, and assign customer queries to the relevant departments.

Sentiment Analysis: AI can analyze customer interactions and feedback to gauge overall sentiment, helping in proactive service adjustments.

Automated Training Modules: PDLT can help create AI-driven training modules that adapt to the learning pace and style of individual new hires.

Document Verification: AI can automate the cumbersome process of document verification, ensuring a faster and more accurate onboarding.

Resource Allocation: AI algorithms can predict the resources a new hire might need based on the department, job role, and past data.

Predictive Analytics: PDLT’s expertise in AI can be used for developing predictive models that analyze past and current data to forecast future trends.

Risk Assessment: AI can evaluate potential risks in various business decisions, providing a quantitative measure of risk factors.

Market Research: AI can sift through vast amounts of market data to identify opportunities or threats faster than traditional methods.

In conclusion, the adoption of AI in these sectors can drastically improve efficiency, customer satisfaction, and ultimately, profitability. PDLT can be your ideal partner in this journey, offering tailor-made solutions that fit your business needs.

ChatGPT’s potential for business optimization is evident, particularly in data retrieval and problem-solving. Through various integration options—custom development, Power Virtual Agents for SharePoint, Google AI Labs for Google Drive, and Atlassian Confluence—the technology offers flexibility in deployment tailored to business needs and existing ecosystems.

Key Takeaways:

Data Accuracy: While concerns exist, coupling ChatGPT with a robust knowledge base can mitigate them.

Practical Use-cases: ChatGPT can enhance customer service, automate troubleshooting, and provide real-time decision support, among other applications.

Training and Prompting: No magic bullet; effective use demands understanding of training, prompting, and vector embeddings.

Cost and Time: Implementation timelines and costs vary; ballpark figures start at around $5,000 and one month.

Risks and Access Levels: Attention to data sensitivity and access levels is non-negotiable.

Consulting Options: Specialist firms can expedite and de-risk implementation.

In sum, ChatGPT offers a viable path to augmenting business operations, but the journey demands planning, expertise, and oversight.
